[Update: the new release of googleVis accounts for changes in RJSONIO’s handling of backslashes, so you probably won’t need the older version.]

Something has apparently changed in the way RJSONIO’s toJSON() function works, and it is causing extra escape characters (backslashes) to appear in the googleVis-generated JavaScript, at least when trying to set a visualization’s initial state. The malformed code makes the browser’s JavaScript engine choke just before it can call chart.draw(), so you don’t see the Flash visualization at all, just a blank space with the pretty footer.

This is at least the case on Mac OS X 10.6.7. Markus Gesmann gets all the credit for tracking it down.
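
If you want to check which situation you're in, a small helper like the one below can compare an RJSONIO version against the two versions compared in this post. This is only a sketch: `classify_rjsonio()` is a made-up name, and it only knows about 0.5 (worked for me) and 0.7 (broken for me). Anything else is reported as unknown rather than guessed.

```r
# Hypothetical helper (not part of googleVis or RJSONIO).
# It only encodes the two data points from this post.
classify_rjsonio <- function(version) {
  # Keep only "major.minor", e.g. "0.7-3" or "0.7.3" -> "0.7"
  major_minor <- sub("^([0-9]+\\.[0-9]+).*$", "\\1", as.character(version))
  switch(major_minor,
         "0.5" = "reported working",
         "0.7" = "reported broken",
         "unknown")  # default: no evidence either way
}
```

With RJSONIO installed, `classify_rjsonio(packageVersion("RJSONIO"))` should report on the version in your library.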

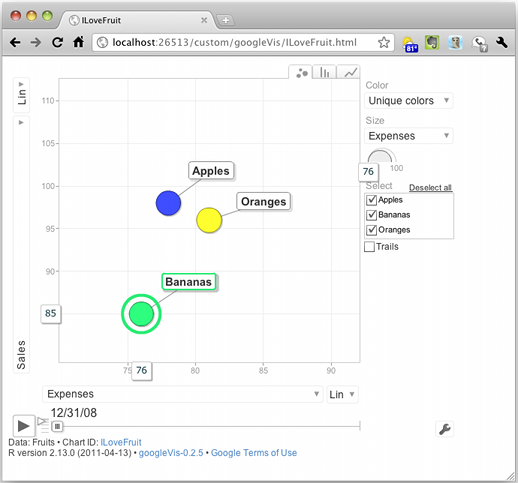

Here’s an example state string which selects a couple of bubbles to be labeled (“Oranges” and “Apples”) and sets the time to start about half-way through:

state.json='{"xAxisOption":"3","xZoomedDataMin":81,"playDuration":15000,"sizeOption":"_UNISIZE","xZoomedDataMax":111,"xLambda":1,"dimensions":{"iconDimensions":["dim0"]},"yZoomedDataMax":91,"duration":{"multiplier":1,"timeUnit":"Y"},"orderedByX":false,"xZoomedIn":false,"yZoomedDataMin":71,"showTrails":false,"orderedByY":false,"iconType":"BUBBLE","uniColorForNonSelected":false,"yZoomedIn":false,"nonSelectedAlpha":0.4,"yLambda":1,"time":"2010","yAxisOption":"4","iconKeySettings":[{"LabelY":27,"key":{"dim0":"Apples"},"LabelX":42}],"colorOption":"6"}'

# create the motion chart

M=gvisMotionChart(Fruits, "Fruit", "Year", options=list(state=state.json))

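Here’s the output in question using the current RJSONIO 0.7. Every quote inside the state string is preceded by two backslashes; JavaScript reads the pair as one literal backslash, so the quote that follows closes the string early and the rest of the line is a syntax error. The option values are also wrapped in square brackets: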

> cat(M$html$chart['jsDrawChart'])

// jsDrawChart

function drawChartMotionChartID6db280db() {

var data = gvisDataMotionChartID6db280db()

var chart = new google.visualization.MotionChart(

document.getElementById('MotionChartID6db280db')

);

var options ={};

options["width"] = [ 600 ];

options["height"] = [ 500 ];

options["state"] = [ "{\\"xAxisOption\\":\\"3\\",\\"xZoomedDataMin\\":81,\\"playDuration\\":15000,\\"sizeOption\\":\\"_UNISIZE\\",\\"xZoomedDataMax\\":111,\\"xLambda\\":1,\\"dimensions\\":{\\"iconDimensions\\":[\\"dim0\\"]},\\"yZoomedDataMax\\":91,\\"duration\\":{\\"multiplier\\":1,\\"timeUnit\\":\\"Y\\"},\\"orderedByX\\":false,\\"xZoomedIn\\":false,\\"yZoomedDataMin\\":71,\\"showTrails\\":false,\\"orderedByY\\":false,\\"iconType\\":\\"BUBBLE\\",\\"uniColorForNonSelected\\":false,\\"yZoomedIn\\":false,\\"nonSelectedAlpha\\":0.4,\\"yLambda\\":1,\\"time\\":\\"2010\\",\\"yAxisOption\\":\\"4\\",\\"iconKeySettings\\":[{\\"LabelY\\":27,\\"key\\":{\\"dim0\\":\\"Apples\\"},\\"LabelX\\":42}],\\"colorOption\\":\\"6\\"}" ];

chart.draw(data,options);

}

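And here’s working code from RJSONIO 0.5, generated from a slightly different state string. Here the inner quotes are escaped with a single backslash and the option values are not wrapped in square brackets: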

> cat(M$html$chart['jsDrawChart'])

// jsDrawChart

function drawChartMotionChartID47a55df7() {

var data = gvisDataMotionChartID47a55df7()

var chart = new google.visualization.MotionChart(

document.getElementById('MotionChartID47a55df7')

);

var options ={};

options["width"] = 600;

options["height"] = 500;

options["state"] = "{\"sizeOption\":\"5\",\"nonSelectedAlpha\":0.4,\"xLambda\":1,\"iconType\":\"BUBBLE\",\"yZoomedDataMax\":91,\"iconKeySettings\":[{\"LabelY\":-124,\"LabelX\":-160,\"key\":{\"dim0\":\"Oranges\"}},{\"LabelY\":53,\"LabelX\":37,\"key\":{\"dim0\":\"Apples\"}}],\"xZoomedIn\":false,\"orderedByX\":false,\"showTrails\":false,\"yZoomedIn\":false,\"yZoomedDataMin\":71,\"xZoomedDataMin\":81,\"orderedByY\":false,\"xAxisOption\":\"3\",\"yAxisOption\":\"4\",\"uniColorForNonSelected\":false,\"duration\":{\"timeUnit\":\"Y\",\"multiplier\":1},\"time\":\"2009\",\"yLambda\":1,\"xZoomedDataMax\":111,\"dimensions\":{\"iconDimensions\":[\"dim0\"]},\"colorOption\":\"2\",\"playDuration\":15000}";

chart.draw(data,options);

}
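
Rather than eyeballing the generated code, you can look for the tell-tale pattern directly. The sketch below uses only base R. `has_double_escapes()` is a made-up name, and it simply checks whether the JavaScript text contains two backslashes followed by a double quote, as in the RJSONIO 0.7 output above. The two test strings are shortened stand-ins, not the full output.

```r
# Hypothetical helper: TRUE if the JavaScript text contains
# backslash, backslash, double quote.
has_double_escapes <- function(js) {
  # '\\\\"' in R source is the three characters: \ \ "
  grepl('\\\\"', js, fixed = TRUE)
}

# Shortened stand-ins for the two outputs (not the full strings)
broken  <- 'options["state"] = [ "{\\\\"time\\\\":\\\\"2010\\\\"}" ];'
working <- 'options["state"] = "{\\"time\\":\\"2009\\"}";'

has_double_escapes(broken)   # TRUE
has_double_escapes(working)  # FALSE
```

On a real chart object, the same check would be `has_double_escapes(M$html$chart['jsDrawChart'])`.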

Maybe this post can help others avoid the blank look I had on my face as I kept staring at a blank page in my browser.